My technical design is flawless

It is well known that developers tend to regard their product as a perfect creation. This makes it very difficult for them to accept that scenarios not explicitly specified in the requirements can still occur. The following sections show why introducing failover mechanisms does not mean admitting that the code is imperfect. Instead, it demonstrates full control and knowledge of the working environment.

The requirements are not perfect

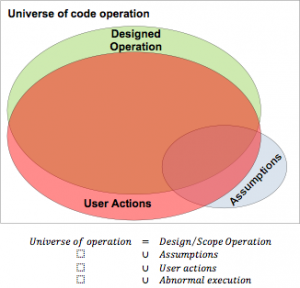

Do perfect requirements exist? Perfect requirements fully capture the user's needs by detailing each working scenario, providing examples and stating the criteria under which the client considers the product acceptable for release. In the real world, however, perfect requirements are never produced, because restrictions[1] mean that the requirements only partially define the universe in which a piece of code will be used.

Introducing failover mechanisms is a way to compensate for the lack of perfect requirements and deliver a more reliable and robust solution. Delivering a solution that can handle minor deviations from the original design shows a better understanding of the environment in which the code will run.

Client expectations are never formalised

Another gap in requirements is that client expectations are never formalised; they remain hidden in the client’s mind. Yet client expectations are the yardstick by which the success of any project is measured, so it is important that everything delivered during the project meets[2] them. Failover mechanisms help to meet these expectations: although the system cannot react immediately to an unexpected input, it can capture that input, allowing the developer to analyse the unexpected behaviour and implement[3] a solution for similar inputs in future.
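As a minimal sketch of this idea, assuming Python and a hypothetical business rule, the wrapper below catches the errors that an unexpected input triggers, records the offending input for later analysis and returns a safe fallback value instead of failing. The names `UnexpectedInputRecorder`, `with_failover` and `parse_quantity` are illustrative only and do not come from any specific library or project.

```python
import json
import logging
from typing import Any, Callable

logger = logging.getLogger("failover")


class UnexpectedInputRecorder:
    """Keeps a record of inputs the main logic could not handle (hypothetical helper)."""

    def __init__(self) -> None:
        self.records: list[dict[str, str]] = []

    def record(self, payload: Any, error: Exception) -> None:
        entry = {"payload": repr(payload), "error": f"{type(error).__name__}: {error}"}
        self.records.append(entry)
        # Also log the entry so the developer can analyse it later.
        logger.warning("Unexpected input captured: %s", json.dumps(entry))


def with_failover(handler: Callable[[Any], Any], fallback: Any,
                  recorder: UnexpectedInputRecorder) -> Callable[[Any], Any]:
    """Wrap `handler` so unexpected inputs are recorded and `fallback` is returned."""
    def wrapped(payload: Any) -> Any:
        try:
            return handler(payload)
        except (ValueError, KeyError, TypeError) as error:
            recorder.record(payload, error)
            return fallback
    return wrapped


def parse_quantity(payload: dict) -> int:
    # Hypothetical rule taken from the written requirements.
    qty = int(payload["qty"])
    if qty < 0:
        raise ValueError("quantity must be non-negative")
    return qty


recorder = UnexpectedInputRecorder()
safe_parse = with_failover(parse_quantity, fallback=0, recorder=recorder)

print(safe_parse({"qty": "3"}))      # prints 3
print(safe_parse({"quantity": 3}))   # prints 0; the KeyError is recorded
print(len(recorder.records))         # prints 1
```

In a real system the records would more likely be written to persistent storage or an issue tracker than kept in memory; the in-memory list simply keeps the sketch self-contained.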

Channelled communication

Earlier, it was mentioned that some restrictions lie outside the developer’s control, such as disk space and memory. Another such restriction is the reliance on network channels, which tend to become unreliable from time to time. Because of wear and tear, load on the channel and other factors, one needs to ensure that any data to be transmitted can be retransmitted when problems occur[4]. Failover mechanisms can be designed so that, when a channel is experiencing problems, the data to be sent over it is not lost but is queued or stored until the channel is available again and the data can be transmitted. Data retrieval can make use of failover too: when the data cannot be fetched through the main channel, an alternative source can be used.
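The two ideas in this paragraph can be sketched in Python, assuming a transport that signals an unavailable channel by raising `ConnectionError`. `StoreAndForwardSender` queues outgoing messages and retransmits them in order once the channel recovers, while `fetch_with_fallback` tries a list of data sources until one responds. All names, including the simulated `FlakyChannel`, are hypothetical.

```python
from collections import deque
from typing import Callable, Deque, Iterable, TypeVar

T = TypeVar("T")


class StoreAndForwardSender:
    """Queues outgoing messages and retransmits them once the channel recovers.

    `send` is a hypothetical transport function that raises ConnectionError
    when the channel is unavailable.
    """

    def __init__(self, send: Callable[[str], None]) -> None:
        self._send = send
        self._pending: Deque[str] = deque()

    def submit(self, message: str) -> None:
        # Store first, so the message survives a channel failure.
        self._pending.append(message)
        self.flush()

    def flush(self) -> int:
        """Try to transmit queued messages in order; return how many were sent."""
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                # Channel is down: keep the message queued and retry later.
                break
            self._pending.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._pending)


def fetch_with_fallback(sources: Iterable[Callable[[], T]]) -> T:
    """Return data from the first source that responds, falling back in order."""
    last_error = None
    for source in sources:
        try:
            return source()
        except ConnectionError as error:
            last_error = error
    raise ConnectionError("all sources failed") from last_error


class FlakyChannel:
    """Simulated channel that can be switched between down and up."""

    def __init__(self) -> None:
        self.up = False
        self.delivered: list[str] = []

    def send(self, message: str) -> None:
        if not self.up:
            raise ConnectionError("channel down")
        self.delivered.append(message)


channel = FlakyChannel()
sender = StoreAndForwardSender(channel.send)
sender.submit("reading-1")
sender.submit("reading-2")
print(sender.pending)       # prints 2: both messages are held while the channel is down
channel.up = True
print(sender.flush())       # prints 2
print(channel.delivered)    # prints ['reading-1', 'reading-2']


def primary() -> str:
    raise ConnectionError("primary unreachable")


def mirror() -> str:
    return "cached copy"


print(fetch_with_fallback([primary, mirror]))  # prints cached copy
```

The sender stores each message before attempting to send it, so a failed transmission never discards data. The queue here lives in memory only; to survive a crash or restart, as the "stored" option in the paragraph suggests, it would need to be persisted, for example to a file or a database.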

[1] The restrictions implied here are project restrictions, such as time, cost and effort, and sometimes the client's lack of knowledge of what is really required. Ted Hardy, in one of his articles, wrote: “Don’t tell me it can’t be done; I know it can. Yes, it could take a server the size of the moon, more money than Scrooge McDuck has in his vault and a project duration that couldn’t be completed before the Sun runs out of hydrogen, but it could be done.” (http://www.betterprojects.net/2011/07/bas-rules-to-software-developers.html). Ted is right in his claim, since requirements gathering normally assumes the Church-Turing thesis (sometimes called the Church-Turing conjecture), which states that a Turing machine can perform any algorithmically computable operation. Developers, on the other hand, know that a complete Turing machine does not exist, since real computers have limited disk and memory space, and although time is unbounded, operations need to return a result to the user within the user's lifetime. Therefore, developers tend to regard NP-complete problems, as well as P and NP problems whose instances exceed real-life restrictions, as problems whose solutions cannot be implemented. The reason is that although a von Neumann architecture computer (a traditional PC) can solve an algorithmically stated problem, since it is an implementation of a Turing machine, the time and resources required cannot always be determined in advance and may not be attainable for a traditional digital application.

[2] It must be stressed that client expectations are very rarely exceeded. Clients tend to expect that whatever they do produces exactly what they have in mind. Unfortunately or fortunately, as reported in The Economist issue of October 29th – November 4th 2011, mind-reading systems are not yet available; therefore, mechanisms that capture and limit the impact of unexpected system behaviour increase the client’s acceptance of such behaviour.

Sections:

- Introduction

- My technical design is flawless

- Why I lost my work?

- Conclusion